Call for Abstract

Scientific Program

The 5th World Machine Learning and Deep Learning Congress will be organized around the theme “Machine Learning: Discovering the New Era of Intelligence”.

Machine Learning 2018 comprises 19 tracks and 26 sessions designed to address current issues in machine learning.

Submit your abstract to any of the mentioned tracks. All related abstracts are accepted.

Register now for the conference by choosing the package that suits you.

Machine Learning is a subset of Artificial Intelligence (AI) that gives computers the ability to learn without being explicitly programmed and to make intelligent decisions. It also enables machines to grow and improve with experience.

It has various applications in science, engineering, finance, healthcare and medicine. Some applications of Machine Learning are given below.

Applications of Machine Learning:

o Predictive maintenance or condition monitoring

o Warranty reserve estimation

o Propensity to buy

o Demand forecasting

o Process optimization

o Telematics

- Retail

o Predictive inventory planning

o Recommendation engines

o Upsell and cross-channel marketing

o Market segmentation and targeting

o Customer ROI and lifetime value

- Healthcare and Life Sciences

o Alerts and diagnostics from real-time patient data

o Disease identification and risk stratification

o Patient triage optimization

o Proactive health management

o Healthcare provider sentiment analysis

- Travel and Hospitality

o Aircraft scheduling

o Dynamic pricing

o Social media-consumer feedback and interaction analysis

o Customer complaint resolution

o Traffic patterns and congestion management

- Financial Services

o Risk analytics and regulation

o Customer Segmentation

o Cross selling and up selling

o Sales and marketing campaign management

o Credit worthiness evaluation

- Energy, Feedstock and Utilities

o Power Usage analytics

o Seismic data processing

o Carbon emission and trading

o Customer-specific pricing

o Smart grid management

o Energy demand and supply optimization

Advantages of Machine Learning-

- Useful where large scale data is available

- Large-scale deployments of Machine Learning are beneficial in terms of improved speed and accuracy

- Understands non-linearity in the data and generates a function mapping input to output (Supervised Learning)

- Recommended for solving classification and regression problems

- Ensures better profiling of customers to understand their needs

- Helps serve customers better and reduce attrition

And many more.

Deep Learning is a subset of Machine Learning which deals with deep neural networks. It is based on a set of algorithms that attempt to model high-level abstractions in data by using multiple processing layers, with complex structures or otherwise, composed of multiple non-linear transformations.

There are four major types of Deep Learning architectures:

- Unsupervised Pretrained Networks (UPNs)

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks

- Recursive Neural Networks

Artificial Intelligence is a technique that enables computers to mimic human behavior. In other words, it is the area of computer science that emphasizes the creation of intelligent machines that work and react like humans. The following are the subsets of Artificial Intelligence –

- Machine Learning

o Deep Learning

o Predictive Analytics

- Natural Language Processing (NLP)

o Translation

o Classification & Clustering

o Information Extraction

- Speech

o Speech to Text

o Text to Speech

- Expert Systems

- Planning, Scheduling & Optimization

- Robotics

- Vision

o Image Recognition

o Machine Vision

Types of Artificial Intelligence:

- Narrow Artificial Intelligence - Narrow artificial intelligence is also known as weak AI. It is artificial intelligence that focuses on one narrow task. Narrow AI is defined in contrast to strong AI, also called artificial general intelligence. All currently existing systems that are considered artificial intelligence of any sort are weak AI at most. It is commonly used in sales predictions, weather forecasts and game playing. Computer vision and Natural Language Processing (NLP) are also part of narrow AI. Google's translation engine is a good example of narrow Artificial Intelligence.

- Artificial General Intelligence

- Artificial Super Intelligence

The human brain has neurons that support adaptability, learning and problem solving. Computer scientists have long wanted computers to solve perceptual problems as quickly as the human brain does, and so the Artificial Neural Network (ANN) model came into existence. An Artificial Neural Network is a biologically inspired computational model that consists of processing elements (neurons) and connections between them, as well as training and recall algorithms. There are many types of Neural Networks:

- Feed-forward neural network

- Radial basis function (RBF) network

- Kohonen self-organizing network

- Learning vector quantization

- Recurrent neural network

- Modular neural networks

- Physical neural networks

- Other types of networks
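As an illustration of the "processing elements and connections" described above, here is a minimal sketch of the recall (forward) step of a feed-forward network. The weights are hand-picked, hypothetical values chosen so that the network approximates XOR; no training algorithm is shown, and the function names are assumptions made for this example.

```python
import math

def sigmoid(x):
    """Logistic activation applied by each neuron."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(inputs, layers):
    """Propagate inputs through the layers; each layer is (weights, biases)."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron_weights, activations)) + b)
            for neuron_weights, b in zip(weights, biases)
        ]
    return activations

# Hypothetical hand-picked weights: 2 inputs -> 2 hidden neurons -> 1 output.
xor_layers = [
    ([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]),  # hidden: OR-like, NAND-like
    ([[20.0, 20.0]], [-30.0]),                        # output: AND of the two
]

for pair in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(pair, round(forward(pair, xor_layers)[0]))
```

In practice the weights would be learned from data by a training algorithm such as backpropagation rather than set by hand.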

The Internet of Things (IoT) is an umbrella term for the entire network of physical devices, home appliances, vehicles and other items embedded with software, sensors, actuators, electronics and connectivity (in other words, an Internet Protocol (IP) address). This connectivity enables these objects to connect and exchange data, resulting in enhanced efficiency, accuracy and economic advantage in addition to reduced human involvement.

The Internet of Things: From connecting devices to human value

- Device Connection

o IoT Devices

o IoT Connectivity

o Embedded Intelligence

- Data Sensing

o Capture Data

o Sensors and tags

o Storage

- Communication

o Focus on Access

o Networks, Cloud, Edge

o Data Transport

- Data Analytics

o Big Data Analytics

o AI & Cognitive

o Analysis at the Edge

- Data Value

o Analysis to Action

o APIs and Responses

o Actionable Intelligence

- Human Value

o Smart Applications

o Stakeholders Benefits

o Tangible Benefits

The process of globalization has turned the whole world into a global village where everyone is interconnected and interdependent. To a large extent, further global development will not be possible without the Internet of Things. The emergence of the Internet of Things (IoT) era has brought new hope and the promise of a better future. The interconnection between the IoT and globalization will be the focus of the session.

- Track 5-1 Internet censorship

- Track 5-2 Internet activism

- Track 5-3 Hybrid Cloud

- Track 5-4 Net neutrality

- Track 5-5 Cyber attack

- Track 5-6 Globalization and governance

- Track 5-7 Blockchain & Bitcoin

- Track 5-8 Global Citizens Have No Privacy

- Track 5-9 How IoT Helps to Feed the World

Deep Learning can solve more complex problems and perform larger tasks. A Deep Learning framework is a supporting structure that makes the complexity of Deep Learning a little easier to manage. Ten popular Deep Learning frameworks are:

- Track 6-1 TensorFlow

- Track 6-2 Theano

- Track 6-3 Keras

- Track 6-4 Caffe

- Track 6-5 PyTorch

- Track 6-6 Deeplearning4j

- Track 6-7 MXNet

- Track 6-8 Microsoft Cognitive Toolkit

- Track 6-9 Lasagne

- Track 6-10 BigDL

Machine learning works effectively in the presence of large amounts of data. Medical science yields a large amount of data daily from research and development (R&D), physicians and clinics, patients, caregivers and so on. These data can be used to synchronize information and to improve healthcare infrastructure and treatments. This has the potential to help many people and to save lives and money. According to one estimate, big data and machine learning in pharma and medicine could generate a value of up to $100B annually, based on better decision-making, optimized innovation, improved efficiency of research and clinical trials, and new tool creation for physicians, consumers, insurers and regulators.

Applications of Machine Learning in Medical Science:

- Track 7-1 Disease Identification/Diagnosis

- Track 7-2 Personalized Treatment/Behavioral Modification

- Track 7-3 Drug Discovery/Manufacturing

- Track 7-4 Clinical Trial Research

- Track 7-5 Smart Electronic Health Records

- Track 7-6 Epidemic Outbreak Prediction

- Track 7-7 Radiology and Radiotherapy

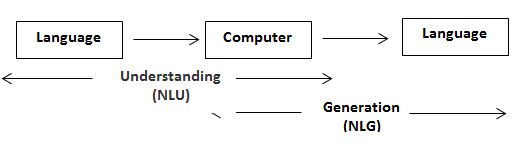

Natural Language Processing (NLP) is a subset of artificial intelligence that focuses on developing systems that allow computers to communicate with people using everyday language. A natural language generation system converts information from a computer database into readable human language, while a natural language understanding system works in the opposite direction.

The field of NLP is divided into two categories:

1. Natural Language Understanding (NLU)

2. Natural Language Generation (NLG)

Areas in Natural Language Processing –

- Morphology

- Grammar & Parsing (syntactic analysis)

- Semantics

- Pragmatics

- Discourse / Dialogue

- Spoken Language Understanding

Areas in Speech Recognition –

- Signal Processing

- Phonetics

- Word Recognition

Computer Vision is a sub-branch of Artificial Intelligence whose goal is to give computers the ability to understand their surroundings by seeing, much as humans do.

Applications of Computer Vision:

- Controlling processes

- Navigation

- Automatic inspection

- Organizing information

- Modeling objects or environments

- Detecting events

- Recognize objects

- Locate objects in space

- Recognize actions

- Track objects in motion

Pattern Recognition is a branch of Machine Learning that focuses on the recognition of patterns and regularities in data, although it is sometimes considered nearly synonymous with machine learning. Pattern Recognition has its origins in engineering, and the term is popular in the context of Computer Vision. Pattern Recognition generally has a greater interest in formalizing, explaining and visualizing the pattern before giving the final result, while machine learning traditionally focuses on maximizing recognition rates before giving the final output. Pattern Recognition algorithms generally aim to provide a reasonable answer for every possible input and to perform the most likely matching of the inputs, taking into account their statistical variation. There are various uses of Pattern Recognition. Some of those are:

- In Medical Science, pattern recognition is the basis for computer-aided diagnosis (CAD) that describes a procedure that supports the doctor’s interpretations and findings.

- Automatic Speech Recognition

- Classification of text into several categories (spam/non-spam email messages)

- The automatic recognition of handwritten postal codes on postal envelopes

- Automatic recognition of images of human faces

- Handwriting image extraction from medical forms

- Optical character recognition

Pattern recognition can be used for at least three kinds of problems: multi-class classification, two-class (binary) classification and one-class classification (commonly anomaly detection). The choice of algorithm depends on the type of label output, on whether learning is supervised or unsupervised, and on whether the algorithm is statistical or non-statistical in nature. Some algorithms that can be used for problem solving are:

- Decision Tree

- LDA/QDA

- Bayes

- K-means

- Networks (of any kind)

- Reinforcement learning
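To make one of the listed algorithms concrete, the following is a minimal sketch of K-means (an unsupervised method) on two-dimensional points. The toy data, the initial centroids and the function name `kmeans` are hypothetical choices for illustration, not part of any particular library.

```python
def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm on 2-D points, starting from the given centroids."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            (sum(p[0] for p in cl) / len(cl), sum(p[1] for p in cl) / len(cl)) if cl else c
            for cl, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical toy data: two visibly separated groups.
toy_points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
final_centroids, final_clusters = kmeans(toy_points, [(0, 0), (10, 10)])
print(final_centroids)
```

The result depends on the initial centroids; real applications usually run several random initializations and keep the best one.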

The use of machines in society has expanded widely in recent decades. Nowadays, machines are used in many different industries. As their exposure to humans increases, the interaction also has to become smoother and more natural. To achieve this, machines must be given a capability that lets them understand the surrounding environment and, in particular, the intentions of a human being. Here, the term machines covers both computers and robots.

During the development of this work, deep learning techniques have been applied to images displaying the following facial emotions: happiness, sadness, anger, surprise, disgust, and fear. In this work, two independent methods are proposed for this task.

- The first method uses auto encoders to construct a unique representation of each emotion.

- The second method is an 8-layer convolutional neural network (CNN).

Predictive Analytics is the branch of advanced analytics which offers a clear view of the present and deeper insight into the future. It uses different techniques and algorithms from statistics and data mining, to analyze current and historical data to predict the outcome of future events and interactions.
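As a small illustration of using historical data to predict a future value, the sketch below fits a straight-line trend by ordinary least squares and extrapolates one step ahead. The monthly sales figures are hypothetical, and a linear trend is only one of many possible modeling choices.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical historical data: sales for months 1 to 5.
months = [1, 2, 3, 4, 5]
sales = [102.0, 109.0, 118.0, 131.0, 140.0]

slope, intercept = fit_line(months, sales)
forecast = slope * 6 + intercept  # predicted sales for month 6
print(round(forecast, 1))
```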

Processes included in Predictive Analytics are:

- Define Project

- Data Collection

- Data Analysis

- Statistics

- Modeling

- Deployment

- Model Monitoring

There are many applications of Predictive Analytics. A few of them are:

- Customer Relationship Management (CRM)

- Collection Analytics

- Fraud Detection

- Cross Sell

- Direct Marketing

- Risk Management

- Underwriting

- Health Care

Predictive Analytics plays a very strong role in Industry Applications like:

- Predictive Analytics Software

- Predictive Analytics Software API

- Predictive Analytics Programs

- Predictive Lead Scoring Platforms

- Predictive Pricing Solutions

- Customer Churn, Renew, Upsell, Cross Sell Software Tools

Nowadays, a huge quantity of data is produced daily. Machine Learning uses this data to produce output that can add value to an organization and help to increase ROI.

Big Data refers to data sets that are so voluminous and complex that conventional data processing application software is inadequate to deal with them. Big Data challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating and data security. There are three dimensions to Big Data, known as Volume, Variety and Velocity.

Data Science deals with both structured and unstructured data. It is a field that covers everything related to the cleansing, preparation and final analysis of data. Data science combines programming, logical reasoning, mathematics and statistics. It captures data in ingenious ways and encourages the ability to look at things from a different perspective.

Data mining is essentially the process of extracting information that was previously incomprehensible and unknown from huge databases, and then using that information to make relevant business decisions. Put more simply, data mining is a set of methods used in the process of knowledge discovery to identify relationships and patterns that were previously unknown. We can therefore describe data mining as a confluence of fields such as artificial intelligence, database management, pattern recognition, data visualization, machine learning and statistics.

Big Data Analytics yields usable information by examining hidden patterns, correlations and other insights in large amounts of data. As a result, it leads to smarter business moves, higher profits, more efficient operations and, finally, happier customers. It adds value to the organization in the following ways:

- Cost reduction

- Faster, Better decision making

- New Products and Services

Big data analytics technologies and tools:

- YARN: a cluster management technology and one of the key features in second-generation Hadoop.

- Spark: an open-source parallel processing framework that enables users to run large-scale data analytics applications across clustered systems.

- Hive: an open-source data warehouse system for querying and analyzing large datasets stored in Hadoop files.

- Kafka: a distributed publish-subscribe messaging system designed to replace traditional message brokers.

- MapReduce: a software framework that allows developers to write programs that process massive amounts of unstructured data in parallel across a distributed cluster of processors or stand-alone computers.

- Pig: an open-source technology that offers a high-level mechanism for the parallel programming of MapReduce jobs to be executed on Hadoop clusters.

- HBase: a column-oriented key/value data store built to run on top of the Hadoop Distributed File System (HDFS).
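The MapReduce programming model mentioned above can be sketched in a few lines. This is a single-process illustration of the map, shuffle and reduce phases for a word count; it does not use the Hadoop API, and all function names are assumptions made for this example.

```python
from collections import defaultdict

def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: combine the values for one key into a single count."""
    return key, sum(values)

def word_count(documents):
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())

print(word_count(["big data", "Big analytics"]))
```

On a real cluster the map and reduce calls run in parallel on different machines, and the framework performs the shuffle over the network.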

In Machine Learning, the data a machine captures often contains a large number of random variables. Dimensionality reduction, or dimension reduction, is the process of reducing the number of random variables under consideration by obtaining a set of principal variables. It can be divided into feature selection and feature extraction. Five common techniques are:

- Principal component analysis (PCA)

- Kernel PCA

- Graph-based kernel PCA

- Linear Discriminant Analysis (LDA)

- Generalized discriminant analysis (GDA)
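As a rough illustration of feature extraction, the sketch below finds the first principal component of two-dimensional data and projects each point onto it, reducing two variables to one. It uses power iteration on the covariance matrix, which is one simple way to obtain the leading component; the data and function name are hypothetical.

```python
import math

def first_principal_component(points, iterations=100):
    """Return the leading principal direction and the 1-D projected scores."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(p[0] - mean_x, p[1] - mean_y) for p in points]
    # Entries of the 2x2 sample covariance matrix.
    cxx = sum(x * x for x, _ in centered) / (n - 1)
    cxy = sum(x * y for x, y in centered) / (n - 1)
    cyy = sum(y * y for _, y in centered) / (n - 1)
    # Power iteration: repeatedly multiply by the covariance matrix and normalize.
    vx, vy = 1.0, 0.0
    for _ in range(iterations):
        nx = cxx * vx + cxy * vy
        ny = cxy * vx + cyy * vy
        norm = math.hypot(nx, ny)
        if norm == 0.0:  # degenerate start vector or zero variance
            break
        vx, vy = nx / norm, ny / norm
    scores = [x * vx + y * vy for x, y in centered]
    return (vx, vy), scores

# Hypothetical toy data lying exactly on the line y = 2x.
toy_points = [(0, 0), (1, 2), (2, 4), (3, 6)]
component, scores = first_principal_component(toy_points)
print(component)
```

For real data with many dimensions, a library routine based on eigendecomposition or singular value decomposition would normally be used instead.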

Model Selection is the task of selecting a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice.

Boosting is a machine learning ensemble meta-algorithm for primarily reducing bias, and also variance, in supervised learning, and a family of machine learning algorithms that convert weak learners to strong ones. A weak learner is defined as a classifier that is only slightly correlated with the true classification (it can label examples better than random guessing). In contrast, a strong learner is a classifier that is arbitrarily well correlated with the true classification.
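One common way to balance fit against simplicity is an information criterion. The sketch below compares a constant model with a straight-line model using the Akaike information criterion in its least-squares form, n·ln(RSS/n) + 2k; the two candidate models, the toy data and the function names are assumptions for illustration only.

```python
import math

def rss_constant(xs, ys):
    """Residual sum of squares of the model y = mean(y)."""
    mean_y = sum(ys) / len(ys)
    return sum((y - mean_y) ** 2 for y in ys)

def rss_linear(xs, ys):
    """Residual sum of squares of the least-squares line."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))

def aic(rss, n, k):
    """AIC for least squares, up to an additive constant; k = number of parameters."""
    return n * math.log(rss / n) + 2 * k

def select_model(xs, ys):
    n = len(xs)
    scores = {
        "constant": aic(rss_constant(xs, ys), n, 1),
        "linear": aic(rss_linear(xs, ys), n, 2),
    }
    return min(scores, key=scores.get), scores

xs = [1, 2, 3, 4, 5, 6]
trend_ys = [1.1, 1.9, 3.2, 3.9, 5.1, 5.8]  # hypothetical data with a clear trend
flat_ys = [2.0, 2.1, 1.9, 2.0, 2.1, 1.9]   # hypothetical data with no trend
print(select_model(xs, trend_ys)[0], select_model(xs, flat_ys)[0])
```

The sketch assumes noisy data; a perfect fit (RSS of zero) would make the logarithm undefined.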

Types of Boosting Algorithms are:

1. AdaBoost (Adaptive Boosting)

2. Gradient Tree Boosting

3. XGBoost
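To show how weak learners can be combined into a stronger one, here is a minimal AdaBoost sketch that uses one-dimensional threshold classifiers (decision stumps) as weak learners. The toy data and helper names are hypothetical, and the code is an illustration rather than a production implementation.

```python
import math

def stump_predict(x, threshold, polarity):
    """Weak learner: predicts polarity when x >= threshold, otherwise -polarity."""
    return polarity if x >= threshold else -polarity

def best_stump(xs, ys, weights):
    """Return (weighted error, threshold, polarity) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(
                w for w, x, y in zip(weights, xs, ys)
                if stump_predict(x, threshold, polarity) != y
            )
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(xs, ys, rounds=3):
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # avoid division by zero
        alpha = 0.5 * math.log((1 - error) / error)
        ensemble.append((alpha, threshold, polarity))
        # Increase the weight of misclassified examples, decrease the others.
        weights = [
            w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
            for w, x, y in zip(weights, xs, ys)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical toy data that no single stump can classify perfectly.
toy_xs = list(range(10))
toy_ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
toy_ensemble = adaboost(toy_xs, toy_ys, rounds=3)
print([predict(toy_ensemble, x) for x in toy_xs])
```

Each individual stump misclassifies at least three of the ten points, while the weighted vote of three stumps labels all of them correctly on this toy set.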

Object detection with digits is a part of Deep Learning. It is one of the most challenging problems in computer vision and is the first step in several computer vision applications. The goal of an object detection system is to identify all instances of objects of a known category in an image.

Cloud Computing is a delivery model of computing services over the internet. It enables real-time development, deployment and delivery of a broad range of products, services and solutions. It is built around a series of hardware and software that can be remotely accessed through any web browser. Generally, documents and software are shared and worked on by multiple users, and all data is centralized remotely instead of being stored on users' hard drives.

- Core Cloud Services

- Cloud Technologies

- On-Demand Computing Models

- Client-Cloud Computing Challenges

Cloud Computing has 3 service categories:

- SaaS (Software as a service)

- PaaS (Platform as a service)

- IaaS (Infrastructure as a service)

Some pros of Cloud Computing:

- Scale and cost

- Choice and Agility

- Encapsulated Change Management

- Next Generation Architectures

Some cons of Cloud Computing are given below:

- Lock-in to service

- Security (Hacking)

- Lack of Control and Ownership

- Reliability

Robotic Process Automation (RPA) lets organizations automate current tasks across applications and systems as if a real person were doing them. RPA is a cost cutter and a quality accelerator. RPA will therefore directly impact OPEX and customer experience, and benefit the whole organization.

Benefits of Robotic Process Automation (RPA) –

- Customers will see improved flexibility, response time, accuracy and experience.

- Staff can add more value to the organization, and their loyalty and engagement will improve.

- Finally, the company will benefit in terms of profitability, consistency, growth and agility.